In 2025, the convergence of Big Data and cybersecurity is reshaping industries, governments, and everyday life. These advances create real opportunities, but they also raise increasingly complex ethical questions that can no longer be swept under the carpet. From privacy intrusions to algorithmic bias, the ethical challenges of the digital age test our moral frameworks to their limits.

With data as the new currency, questions about who controls data, how it is used, and who is accountable for its misuse have never been more pressing. The five ethical issues below demand urgent attention in 2025. Whether you are a tech professional, a policymaker, or simply someone concerned about these issues, understanding the problems is essential to acting responsibly on the frontiers that lie ahead.

The stakes could not be higher, but it is not too late to find a way forward. The ethical minefield posed by Big Data and cybersecurity needs to be explored now.

Ethical Challenge 1: Privacy Invasion and Data Collection

The bulk collection and analysis of personal information is perhaps Big Data’s greatest promise for innovation. However, the practice blurs the line between useful data processing and invasion of privacy. In 2025, the ethical implications of large-scale data collection are being debated more than ever.

Privacy Violation Versus Data Utilization

Companies gather data to improve services, predict trends, and enhance user experiences. Data-driven insights have transformed sectors such as healthcare and retail. Yet a sobering question arises: is such large-scale collection being used responsibly? Most users are completely unaware of how much personal information is gathered, stored, and analyzed, from browsing habits to biometric data. Transparency and consent lie at the heart of the ethical question. Terms and conditions usually exist, but they are often long, highly technical, and routinely ignored, creating a power imbalance in which data subjects have very little control over their own data.

Case Studies: Overreach in Data Collection

Social Media Platforms:

Platforms such as Facebook and Instagram have been criticized for collecting data without explicit consent and for sharing it with third parties for targeted advertising.

Healthcare:

In 2023, a major healthcare provider exposed sensitive patient data because of poor cybersecurity measures, illustrating the risks of over-collection.

Smart Devices:

Smart home devices, such as voice assistants, have been accused of listening in on conversations without users’ knowledge, raising concerns about surveillance.

The Ethical Questions

- How much data collection becomes too much?

- Are users aware of what they are consenting to?

- Who owns the data—the individual or the organization collecting it?

The Path Ahead

Confronting these challenges requires organizations to focus on transparency and user empowerment. They need clearly articulated privacy policies, robust data protection requirements, and, ideally, systems built on opt-in rather than opt-out consent. Governments and regulators, in turn, need to implement stricter data privacy laws such as the EU’s GDPR.
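To make the opt-in principle concrete, here is a minimal Python sketch. The ConsentRecord class, the process function, and purpose names such as "analytics" and "targeted_ads" are hypothetical illustrations, not part of any real library. The key design choice is that a purpose the user never answered is treated as refused, so silence never counts as consent.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical per-user consent store: every purpose starts as NOT granted (opt-in)."""

    user_id: str
    granted: dict = field(default_factory=dict)

    def grant(self, purpose: str) -> None:
        # Only an explicit user action sets a purpose to True.
        self.granted[purpose] = True

    def revoke(self, purpose: str) -> None:
        # Users can withdraw consent at any time.
        self.granted[purpose] = False

    def allows(self, purpose: str) -> bool:
        # A purpose the user never answered is treated as a refusal.
        return self.granted.get(purpose, False)


def process(record: ConsentRecord, purpose: str) -> str:
    """Run a (hypothetical) processing step only if the user opted in."""
    if not record.allows(purpose):
        return f"skipped {purpose}: no opt-in from {record.user_id}"
    return f"processing {purpose} for {record.user_id}"


if __name__ == "__main__":
    alice = ConsentRecord("alice")
    print(process(alice, "targeted_ads"))  # skipped: never granted
    alice.grant("analytics")
    print(process(alice, "analytics"))     # runs: explicit opt-in
    alice.revoke("analytics")
    print(process(alice, "analytics"))     # skipped again after revocation
```

An opt-out design would instead default the lookup to True, which is exactly the power imbalance the paragraph above warns against.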

Through 2025 and beyond, how ethically organizations collect data will largely determine the trust between organizations and individuals. Ignoring these matters not only violates people’s right to privacy but also erodes public confidence across the entire digital ecosystem.

Ethical Challenge 2: Algorithmic Bias and Discrimination

Algorithms are at the heart of Big Data analysis: they extract insights, make predictions, and automate decisions. Powerful as they are, algorithms are not free from bias. They tend to reflect the prejudices embedded in their training data. In 2025, the ethical ramifications of algorithmic bias are highly controversial, primarily because of the discrimination and inequality such biases sustain.

How Big Data Algorithms Can Reinforce Bias

Algorithms are only as good as the data fed into them. Bias in input data may stem from historical inequalities, skewed sampling techniques, or plain human error. In such cases, the algorithm is likely to replicate and even amplify those biases. For instance:

Recruitment Algorithms:

AI hiring tools can perpetuate discrimination, favoring or penalizing candidates along lines of gender, race, and socioeconomic class.

Predictive Policing:

Algorithms in policing often target marginalized communities, thereby perpetuating systemic racism.

Credit Scoring Systems:

Financial algorithms may deny loans or credit to individuals from certain demographic backgrounds, limiting their economic opportunities.

Such examples show how algorithmic bias leads to unfair outcomes, with disadvantaged groups often bearing the brunt.

The Effect on Marginalized Communities

Discriminatory algorithms disproportionately affect marginalized communities, such as racial minorities, women, and low-income people. The result is further entrenchment of existing inequalities and new obstacles to advancement. For example:

Healthcare Algorithms:

Studies indicate that some algorithms used to allocate medical resources have favored white patients over Black patients, even when the Black patients had greater medical need.

Facial Recognition Technology:

This technology has been shown to misidentify darker-skinned people at disproportionately high rates, contributing to wrongful arrests and unwarranted surveillance.

The ethical debate here goes beyond accuracy to questions of justice and fairness. Discriminatory algorithms undermine the very principles of equality and human rights.

The Ethical Questions

- Who is responsible for ensuring that algorithms are fair and unbiased?

- How do we recognize and mitigate biases from both data and algorithms?

- Should we restrict where and how algorithms are used, especially in sensitive areas such as criminal justice or healthcare?

The Way Forward

Tackling algorithmic bias requires a combination of strategies:

Diverse Data Sets:

Training data that is diverse and representative of all demographics can help mitigate bias.

Algorithmic Audits:

Regularly testing algorithms for bias and fairness makes it possible to catch patterns of discrimination early.

Transparency and Accountability:

Transparency and accountability should be guiding principles. Organizations should be open about how their algorithms work and be held accountable for their consequences.

Ethical AI Frameworks:

Governments and industry should develop frameworks to ensure that AI technologies are developed and deployed ethically.
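One simple check an algorithmic audit might include is comparing selection rates across groups. The Python sketch below applies the "four-fifths" heuristic from U.S. employment-selection guidance, flagging any group whose selection rate falls below 80% of the highest group’s rate. The function names, threshold parameter, group labels, and sample data are illustrative assumptions; a real audit would combine several fairness metrics and statistical tests rather than rely on a single ratio.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_audit(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (impact_ratio, flagged)}; flagged is True when the ratio
    falls below `threshold` (0.8 corresponds to the four-fifths heuristic).
    """
    rates = selection_rates(decisions)
    if not rates:
        return {}
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[group] = (round(ratio, 3), ratio < threshold)
    return report


if __name__ == "__main__":
    # Illustrative data: group A has 6 of 10 selected, group B has 3 of 10.
    decisions = ([("A", True)] * 6 + [("A", False)] * 4
                 + [("B", True)] * 3 + [("B", False)] * 7)
    print(disparate_impact_audit(decisions))
```

In this made-up example, group B’s selection rate (30%) is half of group A’s (60%), so B is flagged for closer review. A flag is a prompt for investigation, not proof of discrimination.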

The arrival of 2025 brings an important moral imperative: the fight against algorithmic bias is no longer just a technical challenge. Tackling it means ensuring that Big Data serves as a tool for inclusion rather than exclusion.

Ethical Challenge 3: Surveillance and the Loss of Autonomy

Big Data technologies have increasingly blurred the distinction between security and surveillance. Surveillance tools powered by large-scale data collection and analytics raise serious ethical concerns in 2025, threatening individual autonomy and freedom.

The Rise of Mass Surveillance Technologies

Governments, corporations, and even individuals now have access to powerful surveillance tools, including:

Public Cameras:

Surveillance cameras installed in public places make it easy to track people’s movements.

Internet Oversight:

Monitoring of online activity, including browsing history, social media activity, and even private communications.

Smart Devices:

Smartphones, smart speakers, and wearables quietly collect enormous amounts of data, often without users’ awareness, raising doubts about what is being monitored and how much.

These tools are often justified in the name of security and convenience, yet they raise serious ethical concerns about privacy and freedom.

Security Versus Individual Freedom

The core ethical conflict is between public welfare and personal autonomy. For example:

Government surveillance:

Government surveillance is usually justified as a way to prevent crime and terrorism, but it can also create the conditions for a surveillance state in which citizens are observed constantly. As a result, trust erodes and freedom is compromised.

Corporate Surveillance:

Employers worldwide increasingly use surveillance tools to monitor employee productivity, raising serious concerns about micromanagement and trust.

At what level does surveillance become too much? When does the quest for security slip into infringing individual rights?

The Ethical Questions

- Can mass surveillance be justified if it compromises privacy in any way?

- Who gets to determine acceptable levels of surveillance?

- What mechanisms must be in place to prevent the powerful from abusing surveillance technologies?

The Impact on Society

Widespread deployment of surveillance technologies can produce a chilling effect: people who feel constantly watched begin to censor themselves, creating an atmosphere of fear and scrutiny. Such an atmosphere is antithetical to freedom of expression and personal autonomy, which ought to be guaranteed in a democracy. Consider the following examples:

Whistleblowers and Activists:

Surveillance discourages people from exposing injustice and corruption.

Marginalized Communities:

Because they are disproportionately targeted by surveillance, these communities face additional scrutiny and judgment that other groups do not.

The Way Forward

Addressing these ethical challenges requires:

Clear Regulations:

Governments must enact laws that limit surveillance and protect individual privacy.

Transparency:

Organizations using surveillance technologies must be transparent about what they do and justify why they do it.

Public Awareness:

Educating people about their rights regarding surveillance empowers them to demand accountability.

Ethical Design:

Surveillance technology developers should emphasize privacy and autonomy.

In 2025, the challenge is to strike a fine balance between security and freedom through the ethical use of surveillance technology. Ignoring these concerns would endanger society by placing individuals under constant watch and eroding their autonomy.

Ethical Challenge 4: Data Breaches and Accountability

Because organizations now store enormous volumes of data, they are increasingly exposed to cyberattacks, data breaches, and similar threats. Breaches are expected to surge in 2025, raising serious ethical questions about data security and accountability.

The Ethical Responsibility to Protect User Data

Organizations that collect and store user data have a moral and legal obligation to protect it. However, constantly evolving cyber threats have made breaches increasingly difficult to prevent. The consequences of a breach can be disastrous:

Financial losses:

Stolen credit card details, bank information, and other financial data can cause individuals severe financial harm.

Identity theft:

Information such as Social Security numbers or addresses can be used to defraud or impersonate someone.

Reputational damage:

A breach can destroy customer trust and damage an organization’s reputation for years to come.

The ethical challenge is ensuring that organizations put data security first and accept responsibility when breaches occur.

Who Is Accountable When Data Is Compromised?

Determining accountability in the event of a data breach is often complex. Key questions include:

- Is the organization at fault for inadequate security measures?

- Are third-party vendors or partners responsible if they were the weak link?

- Should users bear some responsibility for weak passwords or falling victim to phishing attacks?

In many cases, organizations bear the brunt because they are the custodians of user data. However, the lack of a coherent accountability framework leads to finger-pointing and paralyzes action.

Case Studies of High-Profile Data Breaches

- Equifax (2017): The breach exposed the personal information of about 147 million people, including names, dates of birth, and Social Security numbers. The company was heavily criticized for its slow response and the failure of its security systems.

- Facebook and Cambridge Analytica: Data harvested from millions of Facebook users without their consent was used for political advertising, a purpose other than the one for which it was collected, raising fundamental questions about data misuse and accountability.

- Colonial Pipeline (2021): A ransomware attack forced the shutdown of a major U.S. fuel pipeline, disrupting fuel supplies along the East Coast and exposing the vulnerability of critical infrastructure.

These cases highlight the serious consequences of data breaches and raise pressing questions about the ethical standards that are evidently lacking in cybersecurity.

The Ethical Questions

- How should organizations balance their appetite for collecting data against their obligation to protect it?

- What penalties should organizations face when user data is compromised through their negligence?

- What mechanisms can enforce accountability among the many partners in complex supply chains?

The Way Forward

Several strategies can help resolve the ethical dilemmas surrounding data breaches:

Upgraded Cybersecurity:

Organizations should invest in modern protection technologies to keep user data secure, including encryption and multifactor authentication.

Regulatory Compliance:

Governments need to impose heavy penalties for violations and adopt stricter data protection laws such as the GDPR.

Transparency and Communication:

In the event of a breach, organizations need to notify affected users promptly to limit the damage.

User Education:

Educating individuals about cybersecurity practices can reduce risks arising from human error.

Ethical Leadership:

Organizations must establish a culture of accountability, prioritizing data protection at every level.
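Multifactor authentication, mentioned among these strategies, often relies on time-based one-time passwords. The following minimal Python sketch implements TOTP as specified in RFC 6238 (the HMAC-SHA1 variant, built on the HOTP algorithm of RFC 4226) using only the standard library. The function names are our own, the shared secret is the RFC’s published test key, and a production system should use a vetted library, secure secret storage, and rate limiting rather than this illustration.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 of an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte choose a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: the counter is the number of `step`-second intervals since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(key, now + drift * step, step, digits)
        if hmac.compare_digest(expected, submitted):  # constant-time comparison
            return True
    return False


if __name__ == "__main__":
    secret = b"12345678901234567890"  # RFC 6238 test secret; never use it in practice
    print(totp(secret, at=59, digits=8))  # RFC 6238 test vector: 94287082
```

The small drift window is a usability trade-off: it tolerates clock skew between the user’s device and the server at the cost of accepting a few extra valid codes.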

Ethical handling of data breaches will be crucial to fostering trust in the digital ecosystem in 2025 and beyond. Organizations must understand that protecting user data is a matter of both law and ethics.

Ethical Challenge 5: Misuse of Data for Manipulation

In today’s digital landscape, Big Data is a double-edged sword: it drives innovation, but it also makes it easy for malicious actors to manipulate opinions, behaviours, and decisions on an unprecedented scale. This emerging issue must be recognized now, because by 2025 data-driven manipulation risks becoming normalized and rampant in politics, marketing, and social engineering.

The Role of Big Data in Political and Social Manipulation

Big Data analytics sifts through enormous datasets to identify the psychological triggers, prejudices, and weaknesses of particular demographics, then delivers hyper-targeted messages that exploit them. This capability has been weaponized in several harmful ways:

Election Interference:

In the 2016 U.S. presidential election, firms such as Cambridge Analytica used Facebook data to micro-target voters with personalized propaganda designed to exploit emotional biases and tip the scales.

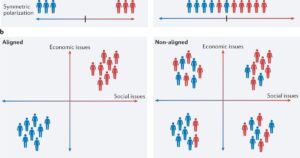

Social Polarization:

Recommendation algorithms on platforms such as Twitter and YouTube can amplify divisive content to maximize engagement, further polarizing society.

Disinformation Campaigns:

Deepfakes and AI-generated fake news, spread rapidly through data-driven targeting, erode trust in institutions and the media.

The ethical dilemma here is clear: when does persuasion become manipulation? And who gets to draw that line in the first place?

Ethical Implications of Targeted Advertising and Propaganda

Targeted advertising is a hallmark of modern marketing, but its ethical limits are increasingly unclear:

Exploitation of Weaknesses:

Data enables companies to target people when they are most vulnerable, for example by advertising unhealthy products to people struggling with addiction or financial services to people in debt.

Psychological Profiling:

Platforms such as TikTok and Instagram use behavioural data to curate feeds engineered to trigger dopamine responses, or, simply put, to keep users hooked.

Culture Creep:

Global organizations use data to distribute homogenized content, eroding local cultures and customs in favor of profitable narratives.

Algorithmic propaganda, in which AI generates content designed to exploit individual biases, therefore poses a serious threat to free thought and informed decision-making.

Case Studies: The Dark Side of Data-Driven Influence

- Facebook and Cambridge Analytica: Personal data harvested from millions of users without consent was used to build psychological profiles and influence voting behavior.

- China’s Social Credit System: Mass data collection and surveillance are used to reward or punish citizens according to their behavior, in an attempt to shape social conduct.

- Deepfakes: AI-generated videos of politicians making statements they never made have circulated during recent election campaigns in countries such as Brazil and India.

These examples show how quickly data can be weaponized to manipulate individuals and societies.

The Ethical Questions

- What limits should be placed on using data to influence human behavior?

- Who should regulate the platforms that profit from these manipulative algorithms?

- How do we prevent data-driven interference in democratic processes?

- Can users meaningfully consent to the use of their data if they do not understand its manipulative power?

The Way Forward: Combating Data Misuse

Algorithmic Transparency:

Require platforms to disclose how their algorithms prioritize content and users.

Heavy Restrictions on Microtargeting:

Ban or restrict psychographic profiling in political campaigns and high-risk industries (e.g., healthcare and finance).

Public Awareness Campaigns:

Educate users about data misuse and how to recognize manipulative content.

Ethics in AI Development:

Create AI systems that contain safeguards to resist malicious data applications, such as deepfakes or hate speech amplification.

Global Partnership:

Address cross-border data misuse through international treaties and cooperation.

The Road Ahead: Addressing Ethical Challenges

In 2025, the ethical challenges posed by Big Data and cybersecurity are no longer theoretical; they are shaping the norms of our world. From privacy violations to algorithmic bias, the stakes demand action. This section outlines essential strategies for addressing these challenges and building a more ethical digital future.

Regulatory Frameworks and Policies for 2025

Governments and regulators must oversee how Big Data and cybersecurity technologies are used. Key first steps include:

Data Protection Laws:

Laws such as the EU’s General Data Protection Regulation (GDPR) and California’s Consumer Privacy Act (CCPA) have set important precedents. Protections like these should be extended and enforced globally to safeguard user data.

Algorithmic Accountability:

Governments should require transparency for AI and machine learning systems, obliging organizations to report how their algorithms make decisions and how they address bias.

Cybersecurity Standards:

Minimum security requirements for organizations that collect and store data can significantly reduce breaches and the risks they pose to users.
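As an illustration of the kind of baseline control a minimum security standard might mandate, the Python sketch below stores passwords as salted, deliberately slow PBKDF2-HMAC-SHA256 hashes rather than in plain text, using only `hashlib`, `hmac`, and `os` from the standard library. The function names and the iteration count are illustrative assumptions; the work factor should be tuned to current guidance and hardware, and memory-hard functions such as scrypt or Argon2 are common alternatives.

```python
import hashlib
import hmac
import os

# Illustrative work factor (an assumption); tune it to current guidance and hardware.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple:
    """Return (salt, digest); store both, never the plain-text password."""
    salt = os.urandom(16)  # a fresh random salt per password defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    salt, digest = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, digest))  # True
    print(verify_password("password123", salt, digest))                   # False
```

The slowness is intentional: it barely affects a single login but makes brute-forcing a stolen password database far more expensive.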

The Role of Organizations in Promoting Ethical Practices

Organizations should put ethics first in how they collect and use data, alongside innovation. The main steps include:

Ethical Data Acquisition:

Adopt practices such as data minimization (collecting only what is necessary) and informed consent (ensuring users agree based on a clear understanding of how their data will be used).

Bias Mitigation:

Audit algorithms regularly for bias and train AI systems on diverse datasets.

Transparency and Accountability:

Be explicit about data practices and take responsibility for breaches and other forms of misuse.

For instance, Microsoft and Google have set examples for others by establishing internal AI principles and review bodies to oversee the development and deployment of AI technologies.
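Data minimization, listed among these steps, can be enforced in code with an explicit allow-list of fields tied to a stated purpose. In this minimal Python sketch, the field names, the `minimize` function, and the order-shipping purpose are hypothetical; anything not on the list is dropped before storage, and the names of dropped fields are returned so the filtering can be logged and audited.

```python
# Hypothetical allow-list: the only fields an imaginary order-shipping service needs.
SHIPPING_FIELDS = frozenset({"order_id", "name", "postal_address"})


def minimize(record: dict, allowed: frozenset = SHIPPING_FIELDS):
    """Keep only allow-listed fields; return (kept_record, sorted_dropped_field_names)."""
    kept = {key: value for key, value in record.items() if key in allowed}
    dropped = sorted(set(record) - allowed)
    return kept, dropped


if __name__ == "__main__":
    raw = {
        "order_id": 1042,
        "name": "A. Customer",
        "postal_address": "1 Example Street",
        "date_of_birth": "1990-01-01",            # not needed to ship an order
        "browsing_history": ["/shoes", "/bags"],  # not needed either
    }
    kept, dropped = minimize(raw)
    print(kept)
    print(dropped)  # ['browsing_history', 'date_of_birth']
```

An allow-list is preferred over a block-list here because new, unanticipated fields are discarded by default instead of being collected silently.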

Empowering Individuals to Protect Their Data

Individuals also have a role to play in addressing these ethical issues. Major steps include:

Digital Literacy:

Widespread education about how data is collected, used, and protected empowers people to make informed choices.

Privacy Tools:

Encourage the use of tools such as VPNs, encrypted messaging apps, and ad blockers that help protect personal information.

Advocacy:

Support organizations and initiatives that promote digital rights and ethical data practices.

Collaboration Between Stakeholders

The ethical issues surrounding Big Data and cybersecurity require a joint effort across sectors:

Public-Private Partnerships:

Building ethical standards and best practices should be a collaboration between governments, tech companies, and civil society organizations.

International Cooperation:

Because data flows across borders, international cooperation is needed to harmonize regulations and address cross-border issues such as cybercrime and disinformation.

Interdisciplinary Research:

Collaboration across technology, ethics, law, and the social sciences can lead to more holistic solutions.

Ethical Innovation: Building a Better Future

Technology itself can also be part of the solution. Promising innovations include:

Privacy-Enhancing Technologies (PETs):

PETs such as homomorphic encryption and differential privacy allow data to be analyzed while limiting what can be learned about any individual.

Explainable AI (XAI):

AI systems that can explain their decisions in terms humans understand are more credible and easier to hold accountable.

Decentralized Systems:

Blockchain and other decentralized technologies can give individuals more control over their data.
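Differential privacy, one of the PETs listed above, can be illustrated with the Laplace mechanism: a counting query changes by at most 1 when any one person is added or removed (its sensitivity is 1), so adding Laplace noise with scale 1/epsilon yields an epsilon-differentially private answer. The Python sketch below is a teaching illustration with hypothetical function names and data. Naive floating-point noise sampling like this is known to leak information in edge cases, so real deployments should rely on vetted differential privacy libraries.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: Iterable, predicate: Callable, epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    """Epsilon-DP count via the Laplace mechanism (a count has sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 29, 62, 18, 47, 33]  # illustrative records
    rng = random.Random(7)
    # Smaller epsilon means more noise and stronger privacy.
    for eps in (0.1, 1.0, 10.0):
        print(eps, round(private_count(ages, lambda a: a >= 40, eps, rng), 2))
```

The parameter epsilon makes the privacy/utility trade-off explicit: each released answer spends some privacy budget, and repeated queries add up.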

Conclusion:

The ethical concerns surrounding Big Data and cybersecurity are complex, but not insoluble. 2025 is the time to act. The onus rests on us to create a digital ecosystem that respects privacy, equity, and accountability. Achieving this will require:

- Strong leadership from governments and organizations.

- Public awareness and engagement.

- Innovation that aligns with ethical principles.

The future may be daunting to contemplate, but leaving it unattended is far more dangerous. It is time for all stakeholders to work together to create a future in which technology serves humanity rather than works against it.